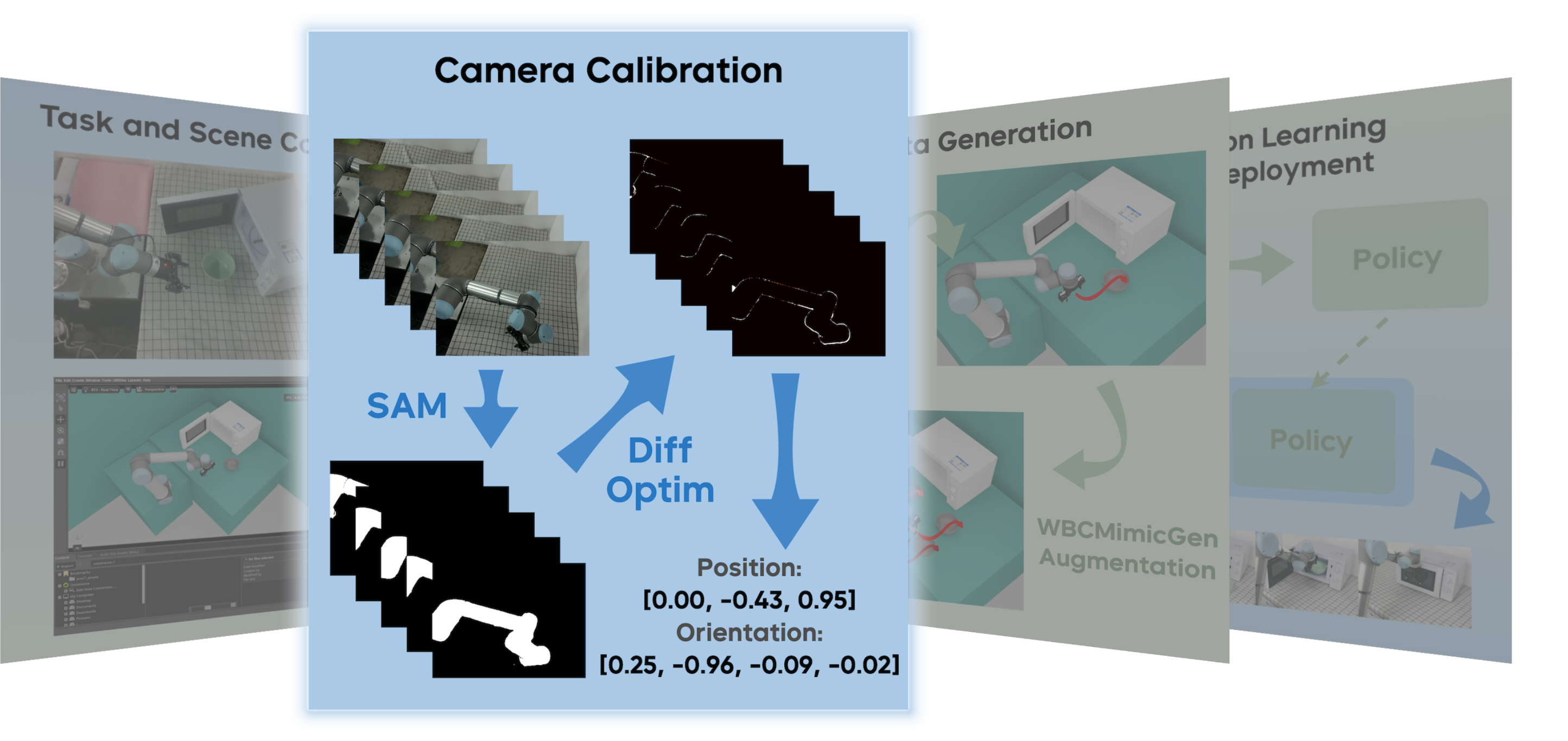

Stage 2: Camera Calibration

Overview

Camera calibration establishes geometric correspondence between simulated and physical visual observations, a prerequisite for vision-based policy transfer. We use differentiable rendering inside an optimization loop to automatically find the camera parameters that minimize the discrepancy between simulation and reality. The method has three parts: multi-view data acquisition across diverse robot configurations, segmentation with the Segment Anything Model (SAM), and gradient-based optimization of the camera extrinsics to achieve pixel-level alignment between rendered and observed masks.

Calibration Workflow

Step 1: Data Collection

Calibration data acquisition protocol:

- Trajectory Design

  - Define joint configurations spanning the operational workspace

  - Ensure mechanical equilibrium before data capture

  - Log kinematic state with sub-degree precision

- Synchronized Acquisition (per configuration)

  - Command target joint state

  - Allow settling time (≥0.5 s)

  - Capture synchronized data:

    - Visual observations

    - Joint encoders

    - Temporal stamps

- Quality Assurance

  - Validate image sharpness and absence of motion artifacts

  - Verify encoder precision

  - Confirm temporal alignment
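The acquisition protocol above can be sketched as a simple loop. This is a minimal illustration, not the code in save_diff_optim_data.py: the `move_to`, `read_joints`, and `grab_rgb` callables are hypothetical stand-ins for the robot and camera drivers, and the `timestamps` key is an extra field added here for illustration.

```python
import time
from typing import Callable, Sequence

import numpy as np


def collect_calibration_data(
    joint_targets: Sequence[Sequence[float]],
    move_to: Callable[[Sequence[float]], None],  # hypothetical: command a joint target
    read_joints: Callable[[], Sequence[float]],  # hypothetical: read joint encoders
    grab_rgb: Callable[[], np.ndarray],          # hypothetical: return an HxWx3 frame
    out_path: str,
    settle_s: float = 0.5,
) -> dict:
    """Visit each target, wait for the arm to settle, then record one sample."""
    images, captured, timestamps = [], [], []
    for target in joint_targets:
        move_to(target)
        time.sleep(settle_s)  # settling time before capture
        captured.append(np.asarray(read_joints(), dtype=np.float64))
        images.append(np.asarray(grab_rgb()))
        timestamps.append(time.time())

    data = {
        "rgb_images": np.stack(images),
        "captured_joint_values": np.stack(captured),
        "target_joint_values": np.asarray(joint_targets, dtype=np.float64),
        "timestamps": np.asarray(timestamps),  # assumption: extra key for QA
    }
    np.savez(out_path, **data)
    return data
```

Passing the hardware access in as callables keeps the loop testable without a robot; in a real setup they would wrap whatever driver API the arm and camera expose.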

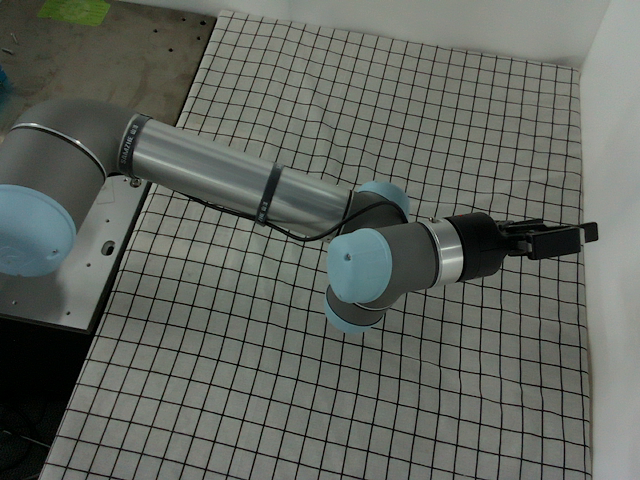

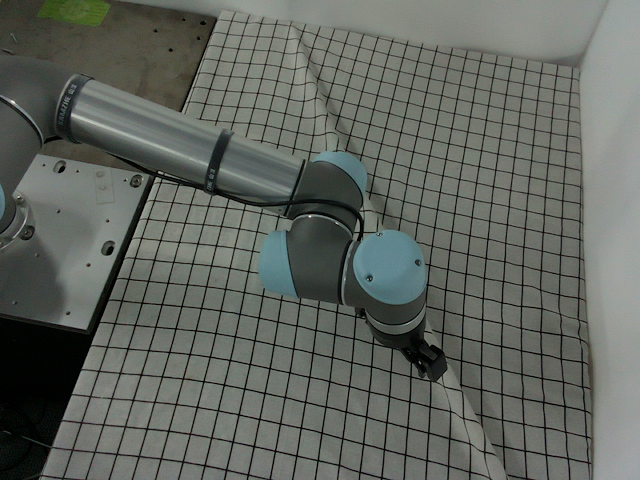

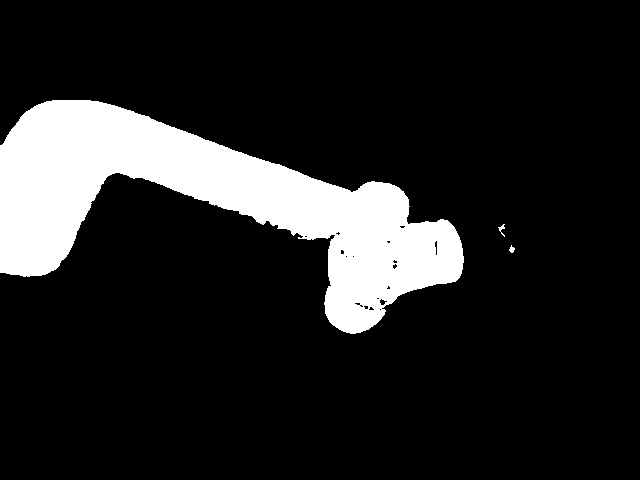

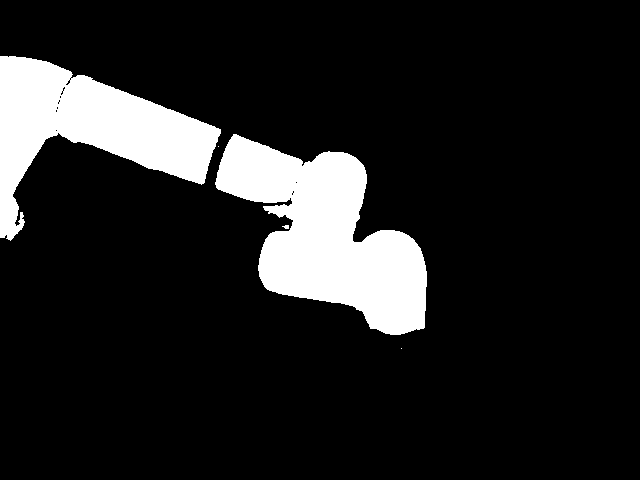

Data Collection - RGB Camera Calibration Frames

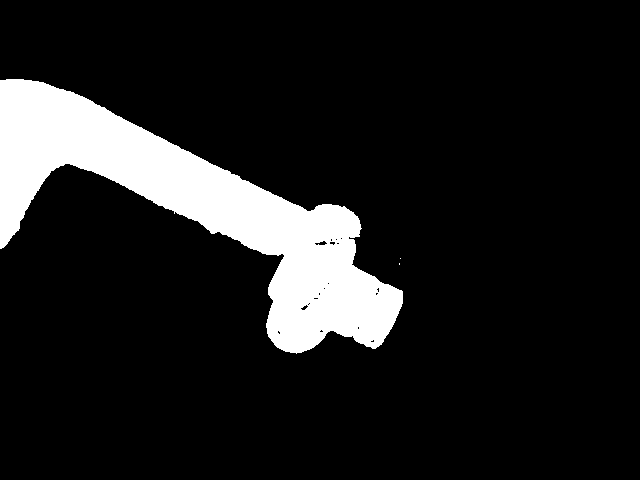

Step 2: Mask Generation

Masks are generated automatically from the captured frames via SAM.

Data Collection - Masks of real images
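As a rough sketch of this step, the helper below segments one frame from a single foreground click. It assumes a predictor object with the `set_image` / `predict` interface of `segment_anything.SamPredictor`; prompting with one point and keeping the highest-scoring candidate are illustrative choices, and the actual prompting strategy in diff_optim_camera_params.py may differ.

```python
import numpy as np


def pick_best_mask(masks: np.ndarray, scores: np.ndarray) -> np.ndarray:
    """Return the highest-scoring candidate as a float32 mask with values 0 or 1."""
    best = int(np.argmax(scores))
    return masks[best].astype(np.float32)


def segment_from_click(predictor, image_rgb: np.ndarray, point_xy) -> np.ndarray:
    """Segment the object under one foreground click.

    `predictor` is expected to behave like segment_anything.SamPredictor.
    `point_xy` is an (x, y) pixel coordinate on the object (an assumption
    of this sketch; it has to be chosen per camera setup).
    """
    predictor.set_image(image_rgb)  # HxWx3 uint8 image in RGB order
    masks, scores, _ = predictor.predict(
        point_coords=np.array([point_xy], dtype=np.float32),
        point_labels=np.array([1]),  # 1 marks a foreground point
        multimask_output=True,       # return several candidate masks
    )
    return pick_best_mask(masks, scores)


# Building a real predictor (checkpoint path is a placeholder):
#   from segment_anything import sam_model_registry, SamPredictor
#   sam = sam_model_registry["vit_h"](checkpoint="path/to/sam_vit_h.pth")
#   predictor = SamPredictor(sam)
```

Converting the mask to float32 makes it directly comparable with a rendered soft mask when the loss is computed.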

Step 3: Optimization Solving

Parameter optimization procedure:

- Initialization

  - Extrinsic position: Coarse measurements

  - Orientation: Workspace-centered

  - Intrinsics: Manufacturer specifications or pre-calibrated

- Gradient-Based Optimization

  - Update simulation camera parameters

  - Render masks across all configurations

  - Compute L1 norm between rendered and observed masks

  - Backpropagate gradients through differentiable renderer

  - Update parameters via gradient descent

  - Iterate to convergence

- Termination Conditions

  - Loss plateau (|ΔL| < ε)

  - Parameter convergence (||∇_θ L|| → 0)

  - Iteration limit
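The three termination conditions map onto a plain gradient-descent loop. In the sketch below, `loss_and_grad` is a hypothetical callable standing in for "render masks, compute the L1 loss, backpropagate through the differentiable renderer"; the step size and tolerances are illustrative defaults, not the values used in diff_optim_camera_params.py.

```python
from typing import Callable, Tuple

import numpy as np


def l1_mask_loss(rendered: np.ndarray, observed: np.ndarray) -> float:
    """L1 norm of the difference between rendered and observed masks."""
    return float(np.abs(rendered - observed).sum())


def gradient_descent(
    loss_and_grad: Callable[[np.ndarray], Tuple[float, np.ndarray]],
    theta0: np.ndarray,
    lr: float = 0.1,
    eps: float = 1e-10,
    grad_tol: float = 1e-6,
    max_iters: int = 1000,
) -> Tuple[np.ndarray, str, int]:
    """Minimize a loss and report which stopping rule fired.

    Returns (theta, reason, iterations), where reason is one of
    "grad_norm", "loss_plateau", or "max_iters".
    """
    theta = np.asarray(theta0, dtype=np.float64).copy()
    prev_loss = None
    for it in range(max_iters):
        loss, grad = loss_and_grad(theta)
        if np.linalg.norm(grad) < grad_tol:  # gradient has vanished
            return theta, "grad_norm", it
        if prev_loss is not None and abs(prev_loss - loss) < eps:  # loss plateau
            return theta, "loss_plateau", it
        theta = theta - lr * grad  # gradient-descent update
        prev_loss = loss
    return theta, "max_iters", max_iters  # iteration limit reached
```

Returning the stopping reason makes it easy to tell a converged calibration from one that simply ran out of iterations, which usually signals a poor initialization or step size.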

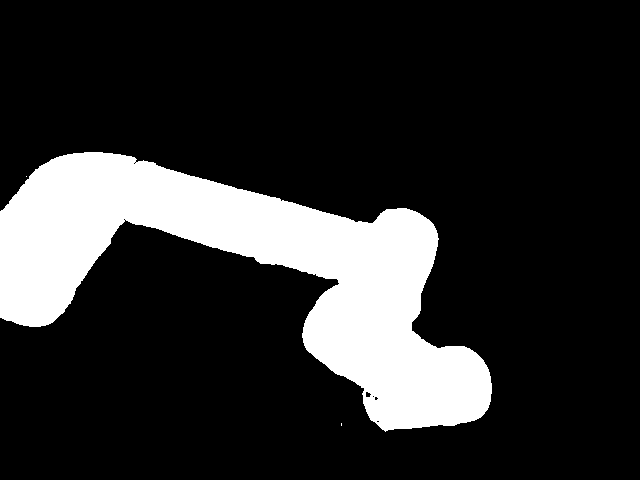

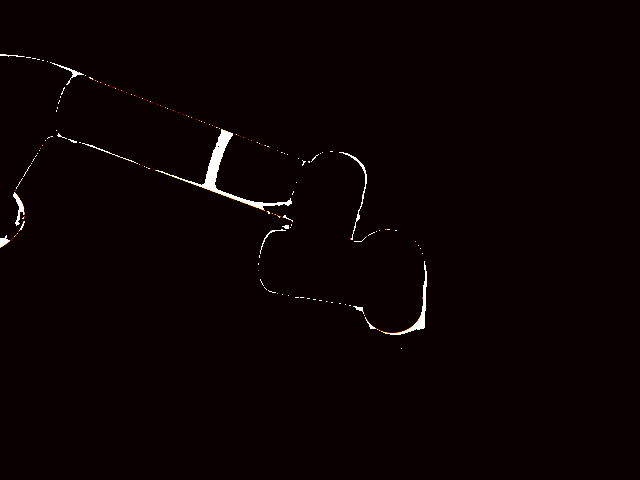

Data Collection - Error of masks after optimization

Advanced Techniques

Improving Calibration Accuracy

- Dense Sampling: Increase configuration density in task-critical regions

- Hierarchical Refinement: Initialize fine-grained optimization with coarse calibration results

Code Reference

Implementation references:

Data Collection Tools:

- File path: https://github.com/Universal-Control/ManiUniCon/blob/main/tools/calibration/save_diff_optim_data.py (calibration data acquisition and persistence)

Calibration Optimization Script:

- File path: https://github.com/Universal-Control/ManiUniCon/blob/main/tools/calibration/diff_optim_camera_params.py (SAM-based segmentation and differentiable optimization pipeline)

Calibration Data Management

Good calibration requires a set of configurations that maximizes pose diversity while keeping the robot visible across the camera's field of view. Vary the joint values widely across the set so that the parameter estimate is well constrained.

Store selected configurations in tools/calibration/calibration_data/{setup_name}/joints.txt. Example structure for ur5_d435:

Joint configuration format (radians):

    [-3.124601, -1.630071, -1.790162, -1.297886, 1.560076, -0.423495]
    [-3.258589, -2.106229, -1.353434, 0.375175, 1.814344, -0.502429]
    [-2.698257, -2.036311, -1.348458, 0.769870, 1.861926, -0.439289]
    [-2.950685, -2.074254, -1.298800, -1.055511, 0.241022, -0.498035]
    [-2.992676, -1.901685, -0.997740, -0.726964, 1.578677, -0.399332]
    [-2.629278, -1.849604, -1.016632, -1.474060, 2.517711, -0.396734]
    [-2.941660, -1.930229, -1.653374, -0.604498, 0.486982, -0.462255]
    [-2.450230, -2.012307, -1.765171, 0.477528, 0.984876, 0.714768]
    [-2.872846, -2.243410, -1.031999, 1.257755, 1.437975, 0.839455]
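A file in this format can be read with the standard library alone, since each line is a valid JSON array. The sketch below assumes one bracketed list per line and six joints per configuration (as for the UR5); `load_joint_configs` and `per_joint_range` are illustrative helper names, not functions from the repository.

```python
import json
from pathlib import Path
from typing import List


def load_joint_configs(path: str, dof: int = 6) -> List[List[float]]:
    """Parse joints.txt: one JSON-style list of joint angles (radians) per line."""
    configs = []
    lines = Path(path).read_text().splitlines()
    for line_no, line in enumerate(lines, start=1):
        line = line.strip()
        if not line:
            continue  # tolerate blank lines between entries
        values = json.loads(line)
        if len(values) != dof:
            raise ValueError(
                f"line {line_no}: expected {dof} joints, got {len(values)}"
            )
        configs.append([float(v) for v in values])
    return configs


def per_joint_range(configs: List[List[float]]) -> List[float]:
    """Spread (max - min) of each joint across the set, in radians."""
    return [max(col) - min(col) for col in zip(*configs)]
```

The per-joint range is a quick check on pose diversity: a joint whose range is close to zero barely moves across the calibration set.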

Post-acquisition data structure:

    ManiUniCon/
    ├── tools/
    │   └── calibration/
    │       └── ur5_d435/
    │           ├── joints.txt
    │           └── multi_frame_calibration_data.npz  # keys: "rgb_images", "captured_joint_values", "target_joint_values"
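Before running the optimization, it can help to sanity-check the saved file. The sketch below loads the three documented keys and reports the largest gap between commanded and measured joint values. It assumes the first axis of each array indexes the captured frames, which the source does not state explicitly; `summarize_calibration_file` is an illustrative helper, not part of the repository.

```python
import numpy as np

EXPECTED_KEYS = ("rgb_images", "captured_joint_values", "target_joint_values")


def summarize_calibration_file(path: str) -> dict:
    """Load the .npz file and return basic consistency statistics."""
    with np.load(path) as data:
        missing = [k for k in EXPECTED_KEYS if k not in data.files]
        if missing:
            raise KeyError(f"missing keys in {path}: {missing}")
        images = data["rgb_images"]
        captured = data["captured_joint_values"]
        target = data["target_joint_values"]

    # Assumption: one entry per captured frame along the first axis.
    if not (len(images) == len(captured) == len(target)):
        raise ValueError("frame count differs between images and joint arrays")

    return {
        "num_frames": len(images),
        "image_shape": tuple(images.shape[1:]),
        # Largest absolute deviation between commanded and measured joints.
        "max_joint_error_rad": float(np.max(np.abs(captured - target))),
    }
```

A large `max_joint_error_rad` suggests the arm had not settled when a frame was captured, so that configuration may be worth re-recording.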

Optimized parameters are output to terminal upon convergence.

Next Steps

With the camera calibrated, simulated and real views are aligned. Stage 3 uses this aligned simulation environment for large-scale data generation via teleoperation and augmentation.