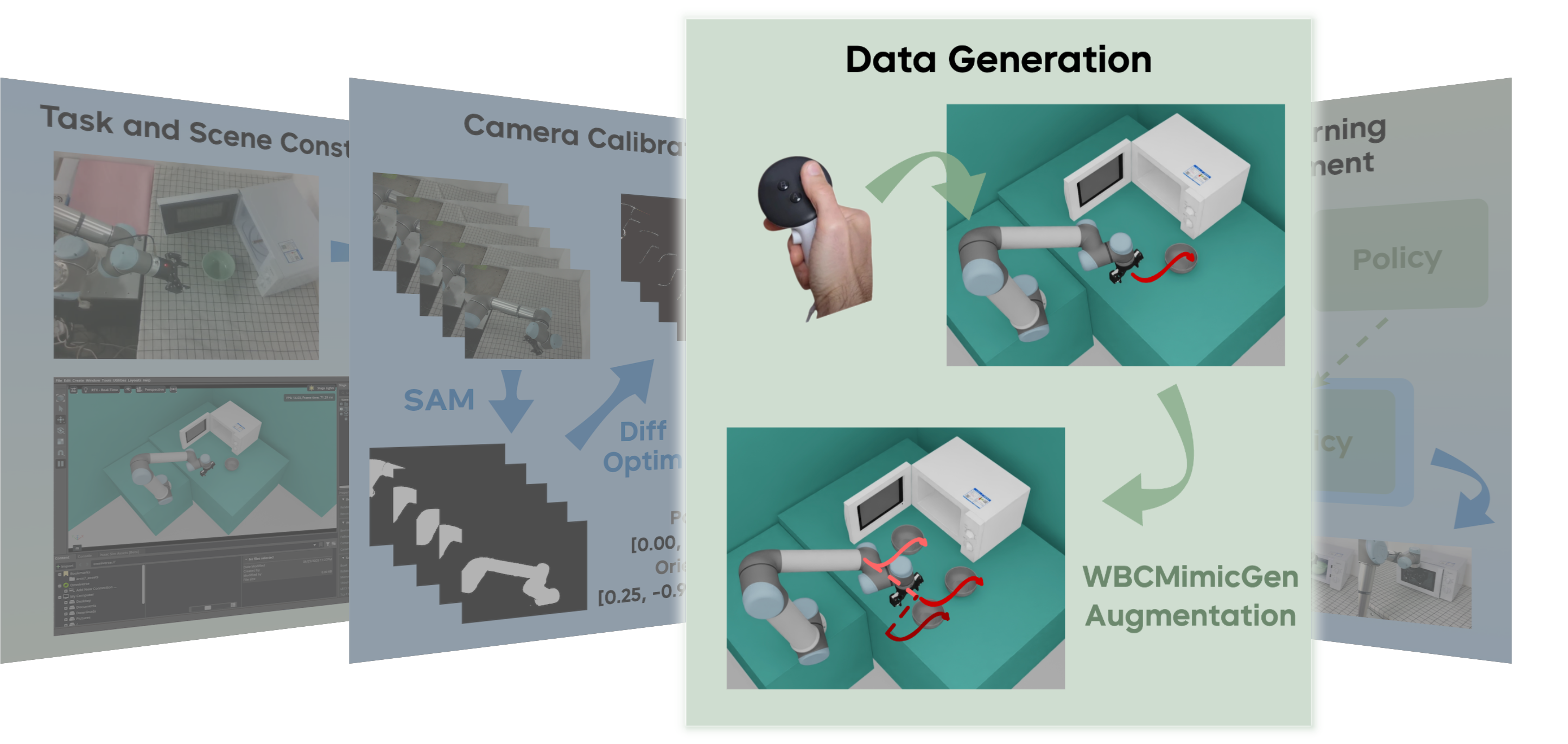

Stage 3: Data Generation with WBCMimicGen

Overview

Data generation for robotic manipulation encompasses multiple approaches, each with distinct advantages and limitations. Motion planning excels at atomic task execution but requires extensive manual implementation for complex sequences. Reinforcement learning handles highly dynamic tasks that demand fine-grained control, though reward engineering remains challenging. MimicGen-based methods bridge this gap: they leverage human demonstrations for contact-rich manipulation while reaching the scale needed for effective learning, particularly in long-horizon tasks. However, trajectories obtained by direct teleoperation in simulation are often discontinuous. To address this limitation, we integrate Whole Body Control (WBC) into the MimicGen framework. The result, WBCMimicGen, generates smooth trajectories while enabling coordinated control of multi-arm mobile manipulators. Implementation details are provided below.

Our approach demonstrates significant data amplification: from 3-5 human demonstrations per task, we generate thousands of trajectory variants with diverse object configurations, as exemplified in the kitchen and canteen scenarios.

Data Collection

WBCMimicGen begins with human demonstration collection via a VR-based teleoperation system, as illustrated below:

Representative demonstrations from both task domains:

Task Decomposition and Annotation

WBCMimicGen decomposes trajectories into object-centric subtasks and applies spatial transformations to generate diverse training scenarios. This hierarchical decomposition follows task semantics and requires either manual or automated annotation of trajectory segments to encode subtask boundaries and objectives.
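The spatial transformation step can be illustrated with the object-centric transform used in MimicGen. Each end-effector pose in a source segment keeps its pose relative to the subtask's reference object, so when that object is moved, the whole segment moves rigidly with it.

The NumPy sketch below assumes that poses are 4x4 homogeneous matrices in the world frame. The helpers `planar_pose` and `transform_segment` are hypothetical names and are not taken from the WBCMimicGen code.

```python
import numpy as np


def planar_pose(x, y, yaw, z=0.0):
    """Hypothetical helper: 4x4 world-frame pose with a yaw rotation about the z-axis."""
    c, s = np.cos(yaw), np.sin(yaw)
    T = np.eye(4)
    T[:3, :3] = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    T[:3, 3] = [x, y, z]
    return T


def transform_segment(ee_poses_src, obj_pose_src, obj_pose_new):
    """Re-target a source subtask segment to a new reference-object pose.

    Every end-effector pose keeps its pose relative to the object:
        T_ee_new = T_obj_new @ inv(T_obj_src) @ T_ee_src
    """
    delta = obj_pose_new @ np.linalg.inv(obj_pose_src)
    return np.array([delta @ T_ee for T_ee in ee_poses_src])
```

In a MimicGen-style pipeline, the transformed poses become end-effector targets that the controller then tracks.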

We illustrate the decomposition strategy through two representative examples:

Kitchen task example

Kitchen task decomposition:

Stage 1: grasp bowl

- Start: Robot initial position

- Goal: Bowl stably grasped

Stage 2: place in microwave

- Start: Bowl stably grasped

- Goal: Bowl placed inside microwave

Stage 3: close microwave door

- Start: Bowl inside microwave

- Goal: Microwave door closed
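One way to encode such an annotation is sketched below. It uses an ordered list of subtasks, each tagged with the object that its segment is defined relative to. It also records the step index at which each subtask ends in a given demonstration.

The `Subtask` type, the `split_demo` helper, and the choice of reference objects are illustrative assumptions. For example, using the microwave as the reference object for the door-closing stage is one such assumption. None of this is the annotation format used by the WBCMimicGen scripts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Subtask:
    name: str
    ref_object: str  # object whose frame the segment is expressed in (assumed)


# Hypothetical encoding of the kitchen decomposition above.
KITCHEN_SUBTASKS = [
    Subtask("grasp bowl", "bowl"),
    Subtask("place in microwave", "microwave"),
    Subtask("close microwave door", "microwave"),
]


def split_demo(num_steps, end_indices, subtasks):
    """Return (subtask, start, end) half-open step ranges for one annotated demo.

    end_indices[i] is the exclusive end step of subtask i; the last one must
    equal num_steps so that the segments cover the whole demonstration.
    """
    if len(end_indices) != len(subtasks):
        raise ValueError("need exactly one end index per subtask")
    bounds = [0, *end_indices]
    if any(b <= a for a, b in zip(bounds, bounds[1:])) or bounds[-1] != num_steps:
        raise ValueError("end indices must be strictly increasing and end at num_steps")
    return [(task, bounds[i], bounds[i + 1]) for i, task in enumerate(subtasks)]
```

For a 300-step demonstration annotated with boundaries `[120, 210, 300]`, this yields three segments that can each be re-targeted independently with respect to their reference object.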

Canteen task example

Canteen task decomposition:

Stage 1: grasp fork

- Start: Robot initial position

- Goal: Fork stably grasped

Stage 2: place fork

- Start: Fork stably grasped

- Goal: Fork placed in the blanket

Stage 3: grasp plate

- Start: Fork placed in the blanket

- Goal: Plate stably grasped

Stage 4: drop plate

- Start: Plate stably grasped

- Goal: Plate positioned above the left blanket

Stage 5: place plate

- Start: Plate positioned above the left blanket

- Goal: Plate placed in the blanket

Large-scale Data Generation

Following task decomposition and annotation, WBCMimicGen generates large-scale training data with systematic variations:
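The text does not detail how the variations are sampled. As a hedged illustration, a simple scheme is rejection sampling of planar object placements within a workspace region, with a minimum separation between objects.

The region bounds, the separation value, and the helper `sample_placements` are hypothetical. The actual distributions are set by the generation configuration listed under Code Reference.

```python
import numpy as np


def sample_placements(names, x_range, y_range, min_sep, rng, max_tries=1000):
    """Rejection-sample planar (x, y, yaw) placements with a minimum pairwise distance."""
    placements = {}
    for name in names:
        for _ in range(max_tries):
            # Uniform position inside the rectangular workspace region.
            xy = rng.uniform([x_range[0], y_range[0]], [x_range[1], y_range[1]])
            # Accept only if far enough from every object placed so far.
            if all(np.linalg.norm(xy - p[:2]) >= min_sep for p in placements.values()):
                yaw = rng.uniform(-np.pi, np.pi)
                placements[name] = np.array([xy[0], xy[1], yaw])
                break
        else:
            raise RuntimeError(f"could not place {name} after {max_tries} tries")
    return placements
```

Seeding the generator, for example with `np.random.default_rng(0)`, makes each sampled scene reproducible. This is useful when regenerating a dataset variant.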

Details of WBCMimicGen

WBCMimicGen extends the MimicGen framework through whole-body control optimization:

Unlike standard MimicGen, which linearly interpolates end-effector poses, WBCMimicGen formulates trajectory generation as a quadratic programming (QP) problem. The QP optimizes joint velocities while respecting manipulability constraints, joint limits, and velocity bounds, which yields smoother, dynamically feasible demonstrations. Formally, the problem seeks a vector $\boldsymbol x$ of base and joint velocities, augmented with slack variables, that tracks the desired end-effector velocities, formulated as in [1]:
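As a sketch of the general structure, assume that the formulation of [1] carries over with one tracking constraint per end-effector. The symbols below are assumptions introduced for illustration, and WBCMimicGen's exact weights and constraint sets may differ:

- $J_i$ maps base and active-joint velocities to the twist of end-effector $i$.
- $\nu_i$ is the desired twist of end-effector $i$.
- $Q$ weights the velocity and slack terms.
- $\mathcal{C}$ is a linear term, used in [1] to favor manipulability.
- $A\boldsymbol x \le b$ collects inequality constraints such as joint-limit dampers.

$$
\begin{aligned}
\min_{\boldsymbol x}\quad & \tfrac{1}{2}\,\boldsymbol x^\top Q\,\boldsymbol x + \mathcal{C}^\top \boldsymbol x \\
\text{s.t.}\quad & J_i \begin{pmatrix} a_\text{base} \\ \dot{q}_\text{active} \end{pmatrix} + \delta_i = \nu_i, \qquad i = 1, \dots, k, \\
& A\,\boldsymbol x \le b, \\
& \mathcal{X}^{-} \le \boldsymbol x \le \mathcal{X}^{+}.
\end{aligned}
$$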

where $\boldsymbol x = (a_\text{base}, \dot{q}_\text{active}, \delta_1, \delta_2, \cdots, \delta_k)$ and $\mathcal{X}^{-}$, $\mathcal{X}^{+}$ are its lower and upper limits. Here $a_\text{base}$ is the velocity of the robot base, and $\dot{q}_\text{active}$ is the velocity of the joints that drive the end-effectors in the QP (the so-called active joints). The $\delta_i$ are slack variables that keep the QP solvable when the desired end-effector velocities cannot be tracked exactly. Without loss of generality, suppose there are $k$ end-effectors and $n$ joints; these variables can then be expressed as:
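To show what the slack variables do, the NumPy sketch below solves a deliberately simplified version of the problem. It makes the following assumptions:

- A quadratic cost $\tfrac{\lambda_q}{2}\|v\|^2$ on the stacked base and joint velocities $v$.
- A quadratic cost $\tfrac{\lambda_\delta}{2}\|\delta\|^2$ on the slack.
- All end-effector tracking constraints stacked into one Jacobian $J$ with target $\nu$.
- Box bounds on the velocities only. The manipulability term and joint-limit inequalities are dropped.

Substituting $\delta = \nu - J v$ leaves a box-constrained least-squares problem, which is solved here by projected gradient descent. The function name and its parameters are hypothetical; a real implementation would more likely call a dedicated QP solver.

```python
import numpy as np


def solve_velocity_qp(J, nu, lower, upper, lam_q=0.1, lam_delta=1.0, iters=5000):
    """Hypothetical simplified whole-body velocity QP, solved by projected gradient.

    minimize   0.5 * lam_q * ||v||^2 + 0.5 * lam_delta * ||delta||^2
    subject to J @ v + delta = nu,   lower <= v <= upper

    J stacks all end-effector Jacobians (rows) over the base and active-joint
    velocities v (columns); nu stacks the desired end-effector twists.
    """
    J = np.asarray(J, dtype=float)
    nu = np.asarray(nu, dtype=float)
    n = J.shape[1]
    # Hessian and linear term of the objective after eliminating delta = nu - J v.
    H = lam_q * np.eye(n) + lam_delta * J.T @ J
    g = lam_delta * J.T @ nu
    step = 1.0 / np.linalg.eigvalsh(H).max()  # 1/L with L the largest Hessian eigenvalue
    v = np.clip(np.zeros(n), lower, upper)
    for _ in range(iters):
        grad = H @ v - g                             # gradient of the reduced objective
        v = np.clip(v - step * grad, lower, upper)   # project back onto the velocity box
    delta = nu - J @ v                               # tracking error absorbed by the slack
    return v, delta
```

With loose bounds, the result matches the damped least-squares solution $(\lambda_q I + \lambda_\delta J^\top J)^{-1}\lambda_\delta J^\top \nu$. When the bounds bind, the returned slack shows how much of the desired twist had to be given up.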

Comparative analysis demonstrates WBCMimicGen's superior trajectory smoothness (right) versus standard MimicGen (left):

Kitchen task's data generated by MimicGen

Kitchen task's data generated by WBCMimicGen

Canteen task's data generated by MimicGen

Canteen task's data generated by WBCMimicGen
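The comparison above is qualitative. To quantify smoothness, a common proxy is the mean squared jerk, the third time derivative of a joint or end-effector trajectory, estimated by finite differences. This metric and the helper below are an assumption for illustration, not a measurement reported for WBCMimicGen.

```python
import numpy as np


def mean_squared_jerk(positions, dt):
    """Mean squared jerk of a uniformly sampled trajectory of shape (T, D) or (T,)."""
    p = np.asarray(positions, dtype=float)
    p = p.reshape(len(p), -1)  # treat a 1-D input as a single channel
    if len(p) < 4:
        raise ValueError("need at least 4 samples for a third difference")
    jerk = np.diff(p, n=3, axis=0) / dt**3  # third finite difference per channel
    return float(np.mean(np.sum(jerk**2, axis=1)))
```

Velocity discontinuities, such as a corner in a piecewise-linear path, appear as large jerk spikes and raise the score. A path with continuous velocity and acceleration keeps it low.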

Data Generation of Mobile Manipulator

A key innovation of WBCMimicGen is its extension to mobile manipulation. The following demonstration shows coordinated base-arm control on the ARX7 platform executing a multi-stage task: grasping toothpaste, placing it in a cup, and repositioning the cup:

Camera on the robot's head.

Camera in the environment.
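Coordinated base-arm control can be sketched by appending base columns to the arm Jacobian. This way, a single velocity vector $(a_\text{base}, \dot{q}_\text{active})$ maps to each end-effector twist.

The base kinematics of the ARX7 platform are not described here. The sketch below therefore assumes a holonomic planar base commanded with $(v_x, v_y, \omega_z)$ in the world frame. It also assumes an arm Jacobian already expressed in the world frame. Both function names are hypothetical.

```python
import numpy as np


def planar_base_jacobian(r):
    """6x3 map from base velocity (vx, vy, wz) to end-effector twist (linear; angular).

    r = p_ee - p_base in the world frame. Base yaw adds wz * (z_hat x r) to the
    end-effector linear velocity, where z_hat x r = (-ry, rx, 0).
    """
    rx, ry, _ = r
    return np.array([
        [1.0, 0.0, -ry],
        [0.0, 1.0, rx],
        [0.0, 0.0, 0.0],
        [0.0, 0.0, 0.0],
        [0.0, 0.0, 0.0],
        [0.0, 0.0, 1.0],  # base yaw rate adds directly to end-effector yaw rate
    ])


def whole_body_jacobian(J_arm, r):
    """Stack base and arm columns so that twist = J_wb @ (a_base, qdot_active)."""
    J_arm = np.asarray(J_arm, dtype=float)
    if J_arm.shape[0] != 6:
        raise ValueError("arm Jacobian must have 6 rows (linear; angular)")
    return np.hstack([planar_base_jacobian(r), J_arm])
```

A velocity-level QP like the one described above can track with such a whole-body Jacobian. This lets the optimizer trade base motion against arm motion instead of treating them separately.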

Code Reference

Code related to data generation:

Teleoperation System:

- Quest VR control:

https://github.com/ByteDance-Seed/manip-as-in-sim-suite/blob/main/wbcmimic/scripts/basic/record_demos_ur5_quest.py

Data Annotation Scripts:

Data Generation Scripts:

- Generation configuration:

https://github.com/ByteDance-Seed/manip-as-in-sim-suite/blob/main/wbcmimic/config/mimicgen/generate_data.yaml

- Generation script:

https://github.com/ByteDance-Seed/manip-as-in-sim-suite/blob/main/wbcmimic/scripts/mimicgen/run_hydra.py

Next Steps

The generated dataset enables subsequent imitation learning for policy training and real-world deployment, detailed in Stage 4.

References

[1] Jesse Haviland, Niko Sünderhauf, and Peter Corke. A holistic approach to reactive mobile manipulation. IEEE Robotics and Automation Letters, 7(2):3122–3129, 2022.