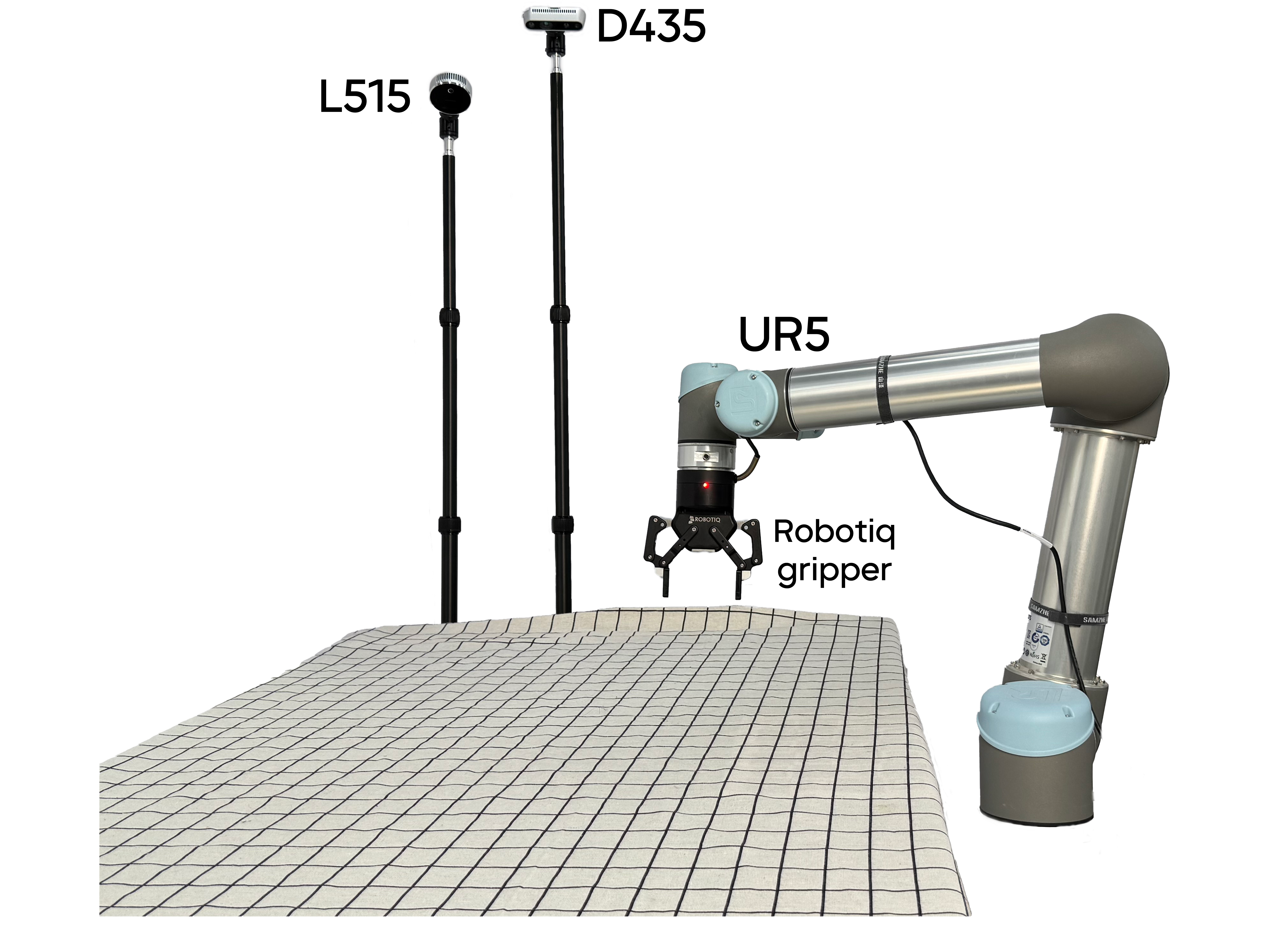

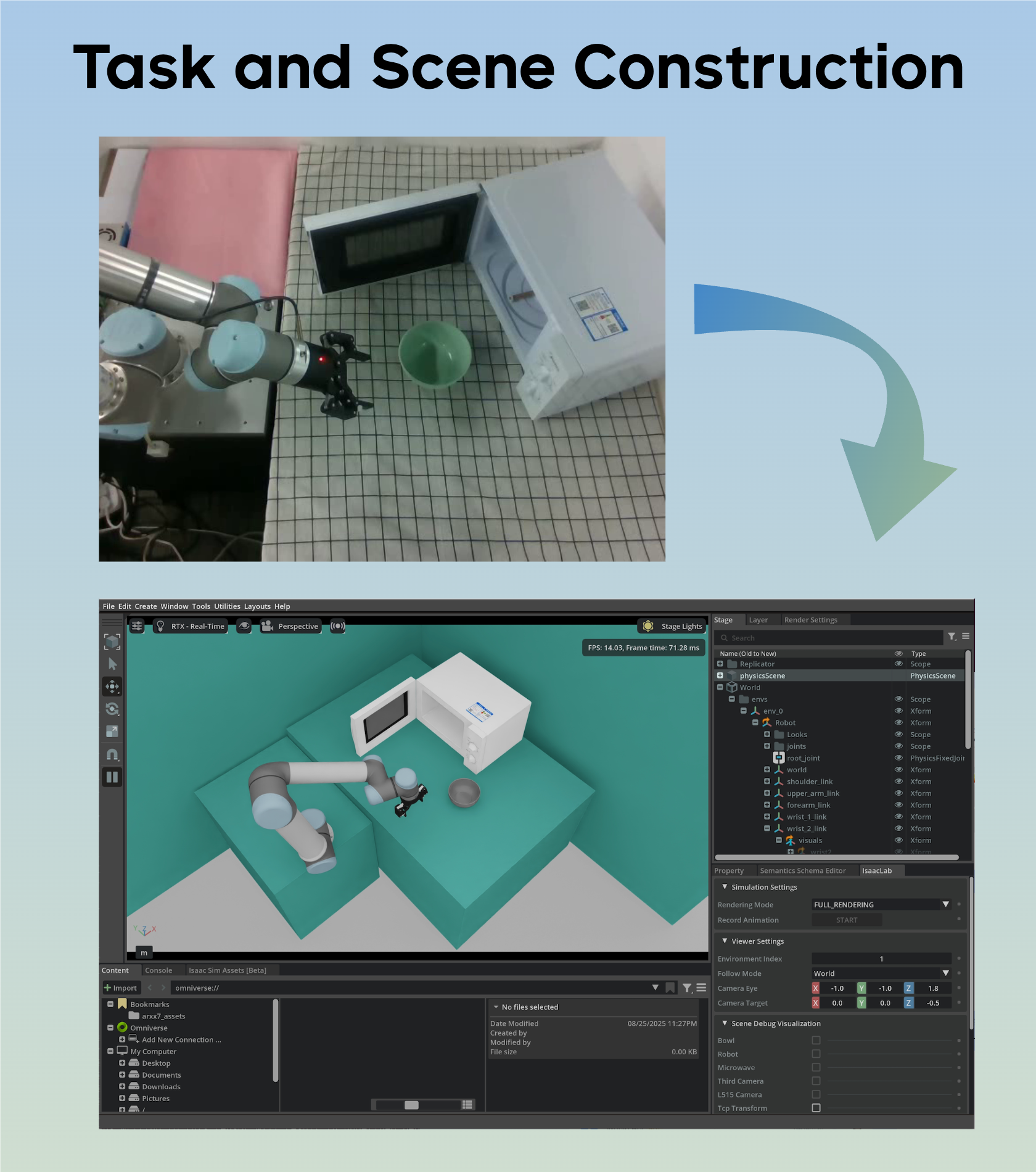

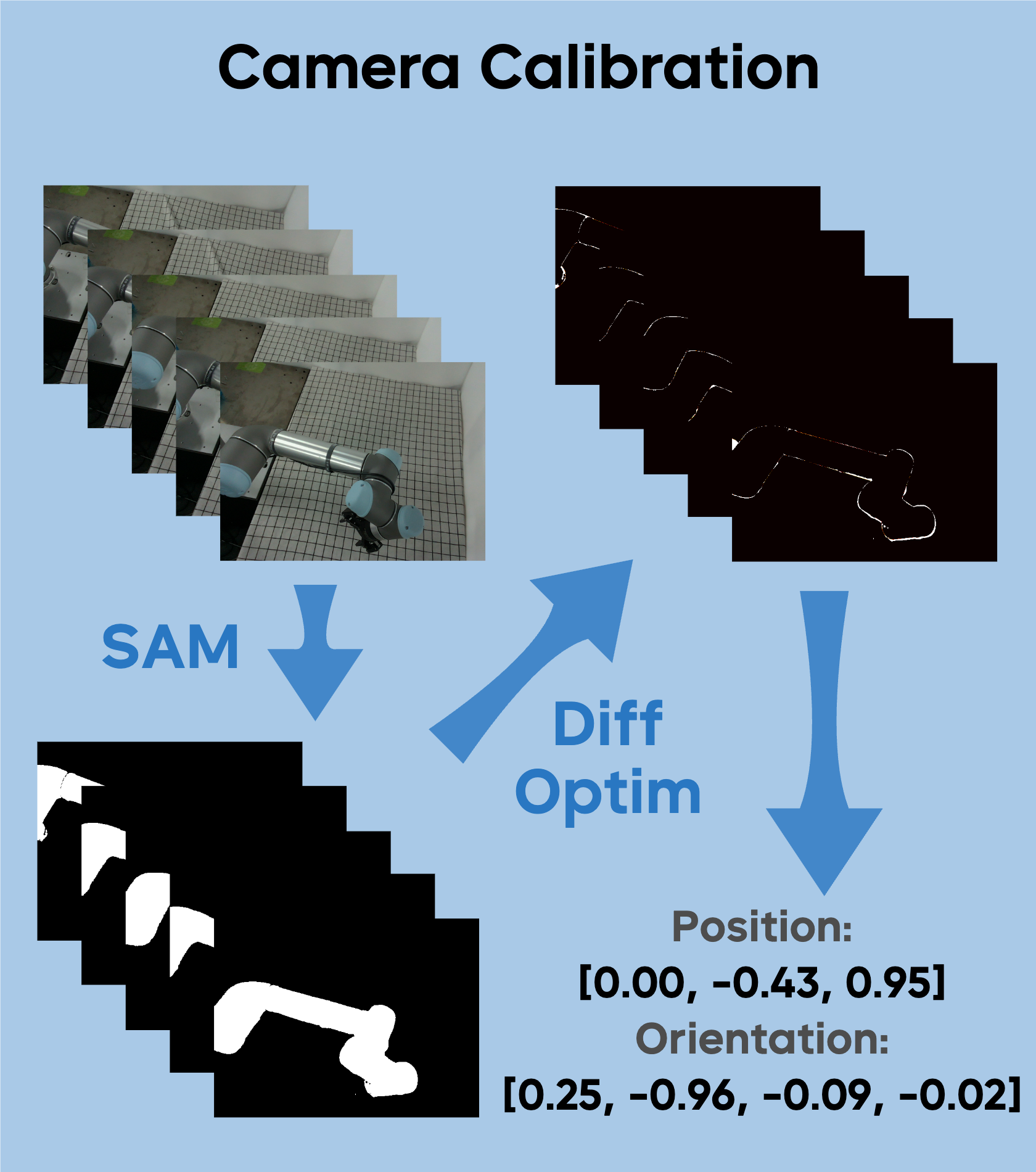

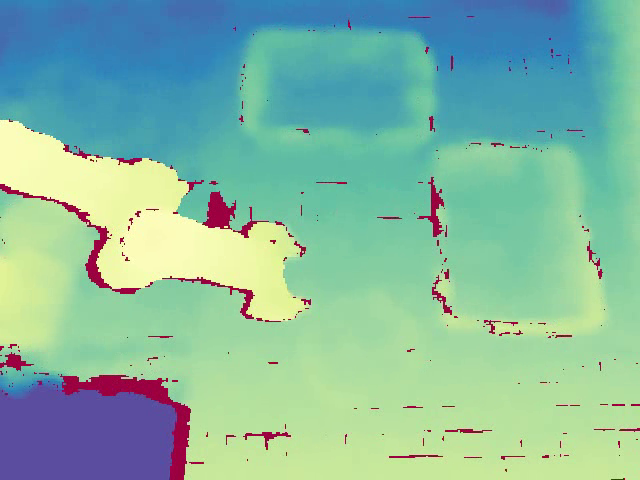

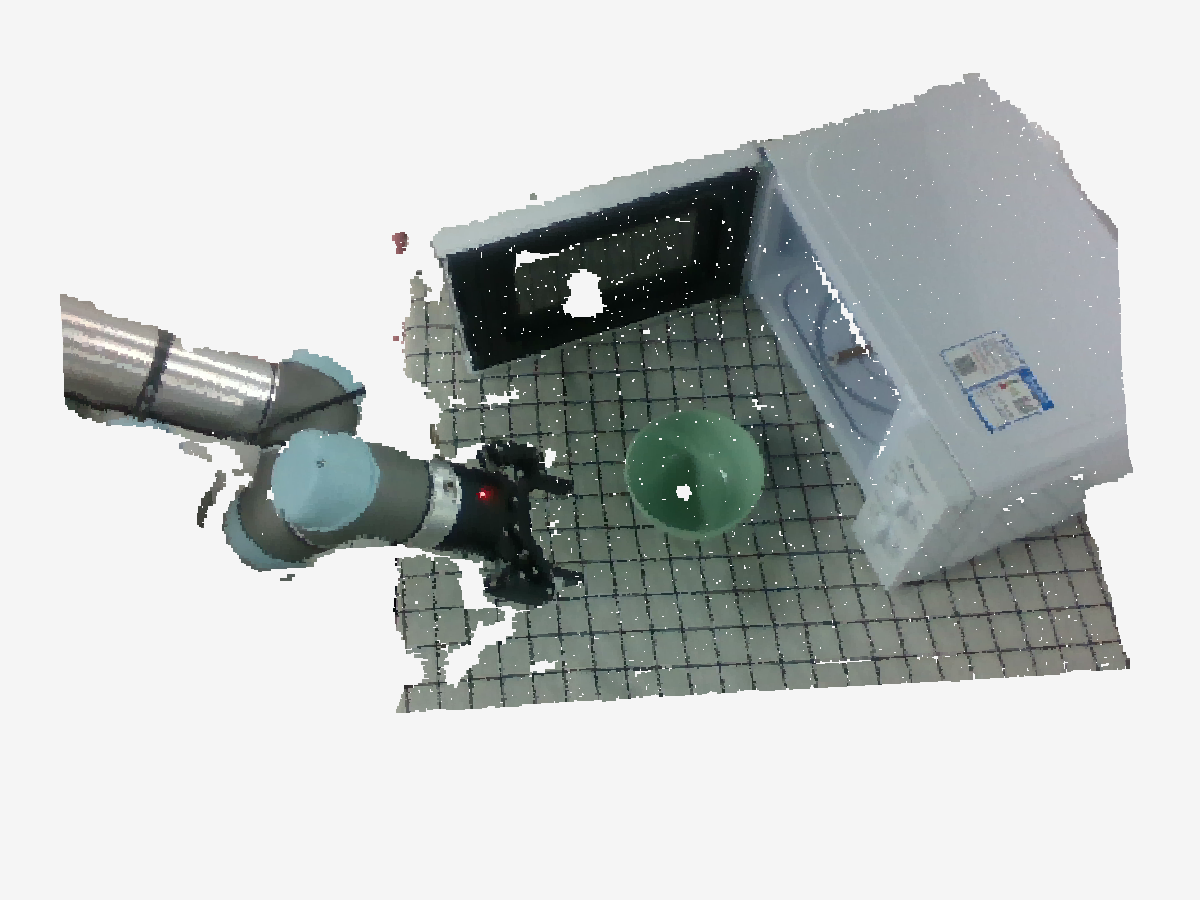

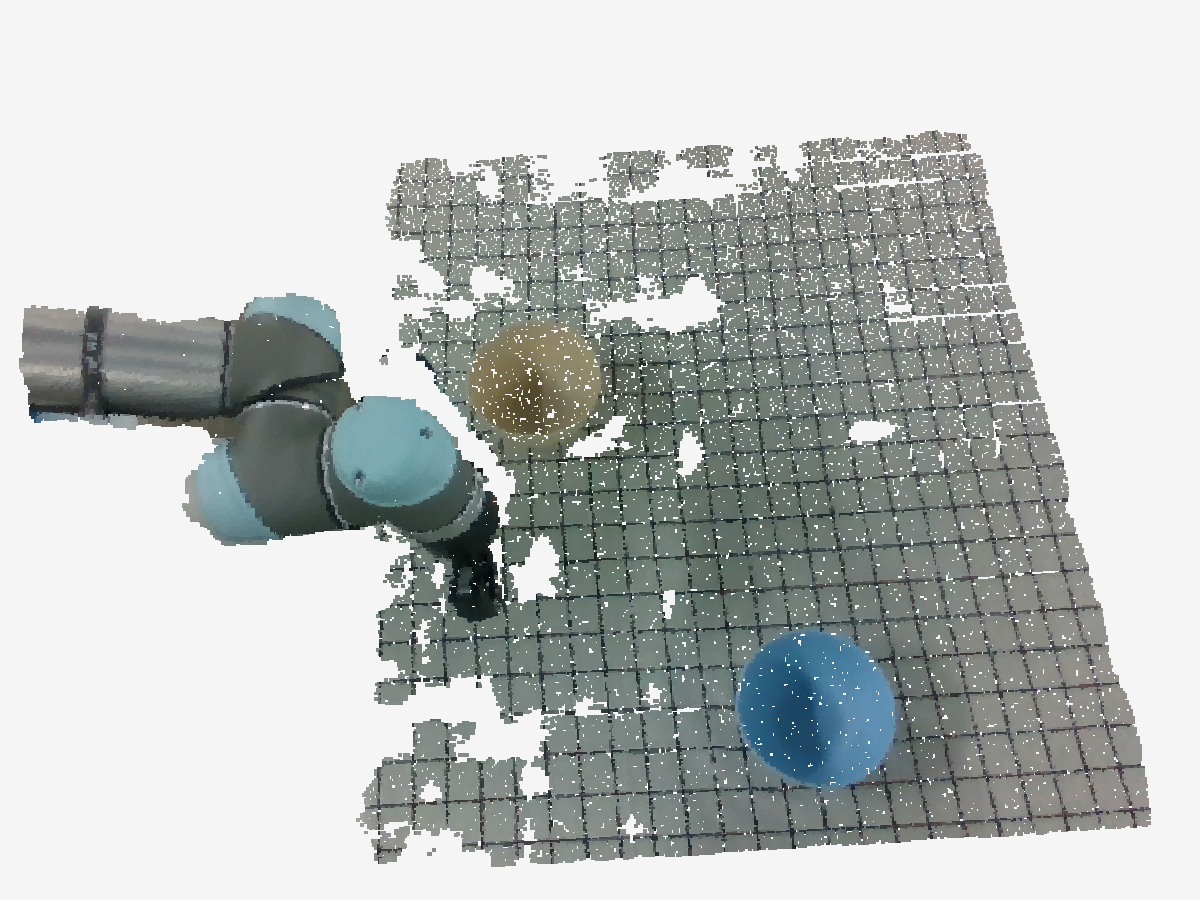

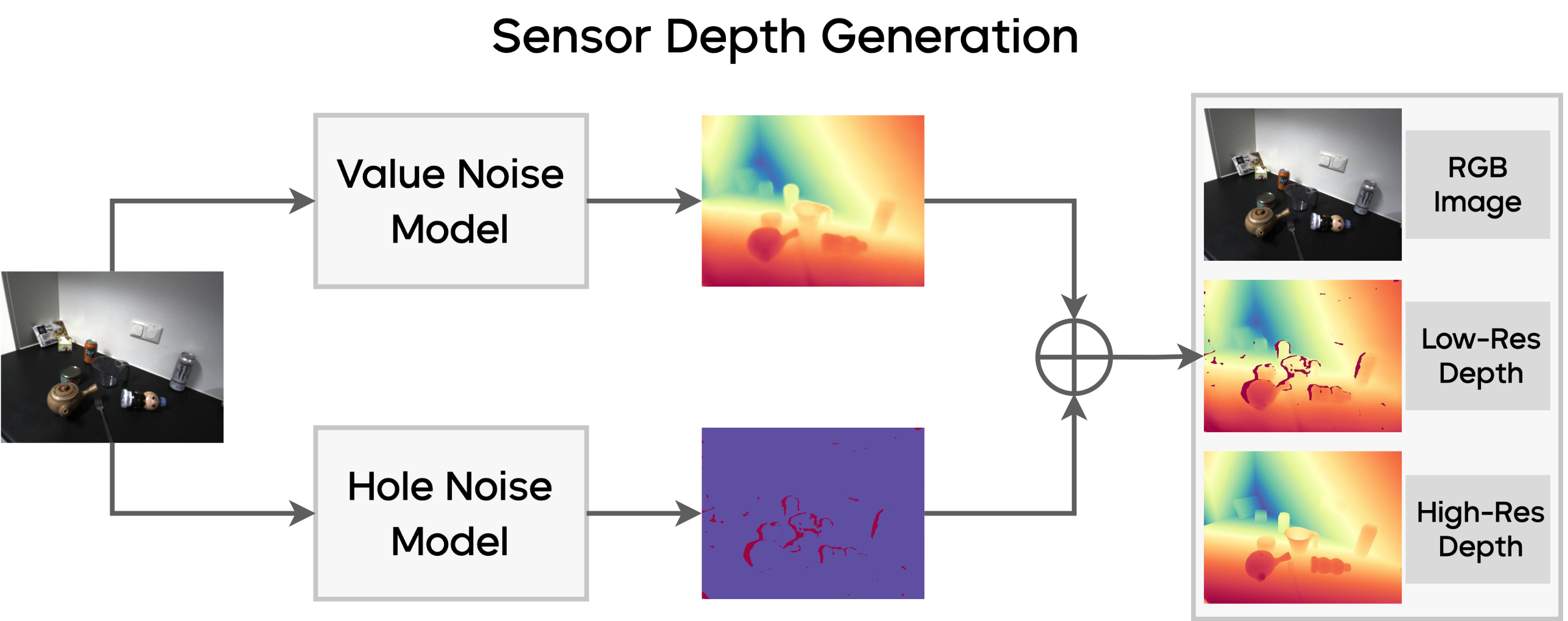

Manipulation as in Simulation: Enabling Accurate Geometry Perception in Robots

*Equal Contribution, †Corresponding Authors

Minghuan did this work when he was at ByteDance Seed.